Infrastructure - Diving deep on Obol

Decentralising the internet bond through Distributed Validator Technology

The Ethereum merge is right around the corner (Q3). It’s a huge step for the space, moving the blockchain from proof of work (POW) to proof of stake (POS).

Obol co-founder Collin Myers and Coinbase Cloud’s Mara Schmiedt have dubbed ETH2 “The Internet Bond”, due to the value that accrues to ETH the asset. The guys at Bankless wrote about it here.

With that said, there are some concerning issues that the team at Obol are trying to address.

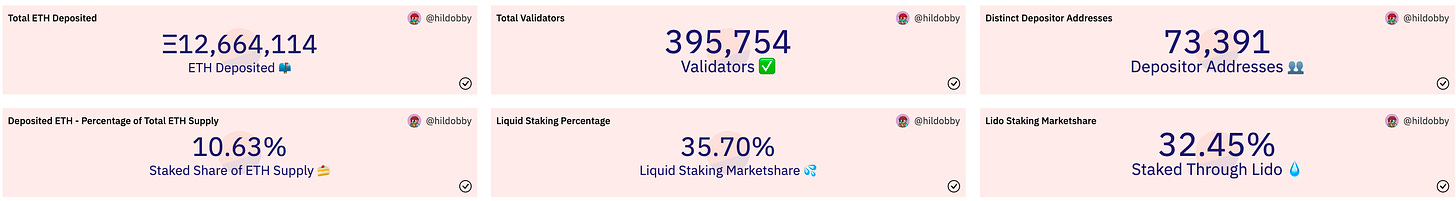

The Merge Data

Just over 10% of ETH is now staked on the beacon chain.

Stake centralisation has been on the rise, with Lido (liquid staking), exchanges and staking-as-a-service companies taking the majority share.

These entities control a huge subset of validators. This is a problem because it introduces central points of failure. What if Lido were hacked? What if someone launched a DDoS attack on Kraken, knocking their servers offline and leaving their validators to rack up penalties?

Or what if the above entities grouped together and induced a fork, collectively controlling our precious internet bond?

These are the things that kept Obol co-founder Oisín Kyne up at night. He wrote about it here in 2018.

Out of these fears, Obol was born and the wheels of DVT were set in motion.

Before we go on, it’s worth covering a few basic concepts in POS Ethereum:

What is a node?

One of the best explanations I could find is on the Ethereum.org website:

Ethereum is a distributed network of computers running software (known as nodes) that can verify blocks and transaction data. You need an application, known as a client, on your computer to "run" a node.

Running a node allows you to trustlessly and privately use Ethereum while supporting the ecosystem.

There are three types of nodes:

Light, full and archive. They each consume data differently. Some are huge (terabytes), while some are… light (less than 400MB).

What is a Client?

A piece of software that downloads a copy of the Ethereum blockchain and verifies the validity of every block, then keeps it up-to-date with new blocks and transactions, and helps others download and update their own copies.

Each client is written in a different programming language (e.g. Go, Java). They are open source and are maintained by the Ethereum community. When running a node, choose the client that suits you best in terms of security, available data and cost to run. To take it a step further, you can choose to run a validator.

To participate as a validator, a user must deposit 32 ETH into the deposit contract and run three separate pieces of software: an execution client, a consensus client (as described above), and a validator.

Execution client (formerly ETH1 clients), currently running on mainnet and testnets.

An execution client specialises in running the EVM and managing the transaction pool for the Ethereum network. These clients provide execution payloads to consensus clients for inclusion into blocks.

Examples include:

Geth, Nethermind, Besu and Erigon.

Consensus client (formerly ETH2 clients):

A consensus client's duty is to run the proof of stake consensus layer of Ethereum, often referred to as the beacon chain.

Examples include:

Teku, Nimbus, Lighthouse, Lodestar, Prysm.

What is a Validator?

These can be described as the “new miners” in POS Ethereum. To expand:

A consensus mechanism is a set of rules and incentives that enable nodes to come to agreement about the state of the Ethereum network. Currently, POW is the consensus mechanism for the Ethereum blockchain.

In proof-of-work, miners prove they have capital at risk by expending energy. In proof-of-stake, validators explicitly stake capital in the form of ether into a smart contract on Ethereum. This staked ether then acts as collateral that can be destroyed if the validator behaves dishonestly or lazily (slashing). The validator is then responsible for checking that new blocks propagated over the network are valid and occasionally creating and propagating new blocks themselves.

Validator Client

A validator client is a piece of code that operates one or more Ethereum validators.

Examples include:

Vouch, Prysm, Teku, Lighthouse.

Distributed Validator Technology

The above process for running a validator is leading to centralisation among the few parties identified at the start of this article. As Collin Myers has stated, this is not a flaw. It’s merely that the process “is simply reaching the end of its software cycle and requires iteration to enter its next software cycle”.

DVT is that iteration.

DVT enables a new kind of validator, one that runs across multiple machines and clients simultaneously but behaves like a single validator to the network.

This effectively spreads risk and allows for not only more winners across the board, but further decentralisation.

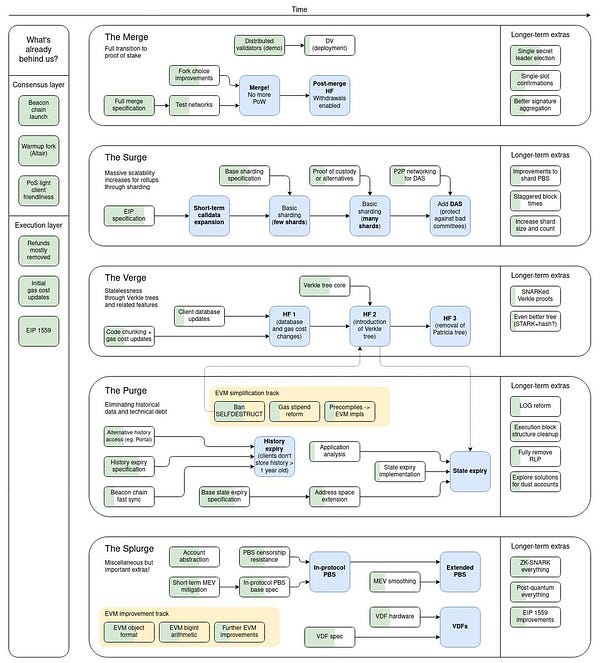

Let’s start by setting out how important this is to Vitalik and the EF. Note that it’s top of the roadmap:

What’s wrong with the current iteration of validators?

I believe the above quote really sums up the situation individual validators are in under the current POS climate.

There are two issues that come to mind, assuming you have both the capital to stake and the hardware to run the software; they include:

Availability failure - where your internet or server drops offline, resulting in an “inactivity penalty”. A further, more severe consequence can occur if penalties push your collateral below 16 ETH: the validator is forcibly ejected from the network. Slashing proper is the separate, harsher penalty for provable misbehaviour such as double-signing, and it locks your stake up for over a month.

Validating key compromise - “Not your keys, not your coins”. Many keys have been compromised in the past, and this area has always been a weakness in the system. Obol believes that:

- customers cannot be trusted to securely custody a validator hot key.

- leaving encrypted private keys on chain makes the validators using them vulnerable. If the encryption key leaks, all of those shares can be decrypted.

Both of the above combined can push operators into more centralised solutions, and that’s exactly what’s happening today.

Current solutions:

Active/Passive redundancy

This means having two independent validator systems that share the same private key and are meant to operate at different times.

You effectively run a backup to mitigate penalties from going offline. If one server goes down, the other comes on. This risks double-signing if both validators are ever online at the same time. The punishment for this is severe.

What’s more, you have two vectors for private key exposure, doubling your key risk.

There isn’t much room for error, particularly for small operators.
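To make the double-signing risk concrete, here is a minimal sketch in Python. It is not how any real client detects slashable messages. The slot numbers, block roots and the `find_double_signs` helper are all hypothetical, and it only models the simplest case: two different blocks signed for the same slot with the same key.

```python
def find_double_signs(messages):
    """Return the slots where one key signed two different block roots.

    `messages` is a list of (slot, block_root) pairs, all signed with
    the same validator key. This is a toy model, not a client API.
    """
    first_seen = {}   # slot -> first block root signed for that slot
    conflicts = []
    for slot, root in messages:
        if slot in first_seen and first_seen[slot] != root:
            conflicts.append(slot)
        first_seen.setdefault(slot, root)
    return conflicts


# The primary was still signing when the backup wrongly assumed it was down.
primary = [(100, "0xaaa"), (101, "0xbbb")]
backup_after_false_failover = [(101, "0xccc"), (102, "0xddd")]

conflicts = find_double_signs(primary + backup_after_false_failover)
print(conflicts)  # slots where both instances signed conflicting blocks
```

Neither machine did anything wrong on its own. The conflict only appears when the network sees both sets of messages, which is why a mistimed failover is so dangerous in an active/passive setup.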

Obol’s solution:

Active/Active fault tolerance

DVT enables a new kind of validator, one that runs across multiple machines and clients simultaneously but behaves like a single validator to the network. This enables your validator to stay online even if a subset of the machines fail. This is called Active/Active fault tolerance. Think of it like engines on a plane: they all work together to fly the plane, but if one fails, the plane isn't doomed.
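The plane analogy can be sketched as a simple quorum check. The cluster size of four, the threshold of three and the node names below are assumptions chosen for illustration, not Obol's actual configuration.

```python
from itertools import combinations

# Hypothetical cluster: four machines, any three of which can act together.
NODES = ["node-a", "node-b", "node-c", "node-d"]
THRESHOLD = 3


def still_validating(nodes, failed, threshold):
    """True if enough machines remain online to perform validator duties."""
    online = set(nodes) - set(failed)
    return len(online) >= threshold


# Check every possible single and double outage.
survives_any_single_failure = all(
    still_validating(NODES, failed, THRESHOLD)
    for failed in combinations(NODES, 1)
)
survives_any_double_failure = all(
    still_validating(NODES, failed, THRESHOLD)
    for failed in combinations(NODES, 2)
)

print(survives_any_single_failure, survives_any_double_failure)
```

Under these assumed numbers the validator rides out the loss of any one machine, but not two. Choosing the cluster size and threshold is a trade-off between how many failures you tolerate and how many machines must cooperate.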

In addition, this new infrastructure primitive enables a validator key to be split between independently operating validator instances.

With a divided validating key, the entire private key for a validator never exists anywhere and is only simulated between a group of instances each holding a subset of the key.
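The idea of a key that is "only simulated" can be illustrated with a toy threshold scheme. The sketch below uses Shamir-style shares over a prime field and a deliberately insecure stand-in "signature" (key times message, modulo a prime) purely because it is linear in the key, which is the property that lets partial signatures be combined. It is not BLS, it is not Obol's implementation, and every name in it is hypothetical.

```python
import secrets

P = 2**127 - 1  # a Mersenne prime, used as a toy field modulus


def split_key(secret, threshold, num_shares):
    """Split `secret` into shares; any `threshold` of them suffice."""
    coeffs = [secret] + [secrets.randbelow(P) for _ in range(threshold - 1)]

    def poly(x):
        return sum(c * pow(x, i, P) for i, c in enumerate(coeffs)) % P

    return {x: poly(x) for x in range(1, num_shares + 1)}


def lagrange_at_zero(xs):
    """Lagrange coefficients for interpolating the polynomial at x = 0."""
    coeffs = {}
    for i in xs:
        num, den = 1, 1
        for j in xs:
            if j != i:
                num = num * (-j) % P
                den = den * (i - j) % P
        coeffs[i] = num * pow(den, -1, P) % P
    return coeffs


def partial_sign(share, message):
    """Toy, insecure 'signature': linear in the key share."""
    return share * message % P


def combine(partials):
    """Combine partial signatures without ever rebuilding the key."""
    lam = lagrange_at_zero(list(partials))
    return sum(lam[i] * sig for i, sig in partials.items()) % P


key = secrets.randbelow(P)           # exists here only to check the toy
shares = split_key(key, threshold=3, num_shares=4)
message = 123456789

# Nodes 1, 2 and 4 each sign with their own share; node 3 is offline.
partials = {i: partial_sign(shares[i], message) for i in (1, 2, 4)}
signature = combine(partials)
print(signature == key * message % P)
```

In this toy the dealer creates the full key before splitting it. Avoiding even that step requires a distributed key generation ceremony, which is outside the scope of this sketch.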

What’s more, operators can customise their staking cluster:

Pulling it all together

This is where Obol would operate in the stack:

These distributed validators connect to various consensus layer and execution layer clients, forming a truly credibly neutral layer:

For me, the below quote from the team is the best way to sum up where Ethereum staking is headed and why Obol is so important:

Without this layer, highly available uptime will continue to be a moat and stake will accumulate amongst a few products.

If Obol can get their tech and incentives right, I believe this can be a real game changer for the further decentralisation of the Ethereum ecosystem.

As Oisín has put it:

My belief is if we can remove the single point of failure in validator operation, we can place more trust in smaller node operators.

If we can share risk, we can share stake. If we want to solve the staking problem, we need to make Ethereum staking safe and profitable for groups of humans together.

This is coordination technology after all.

A winning strategy

Obol have teamed up with a lot of the “staking as a service” providers with the goal of fully integrating their credibly neutral layer. The launch of “Charon” v1 will focus on these trusted parties, while v2 will move to the realm of fully trustless distributed validators.

Last year they received a grant from the Lido DAO to improve decentralisation. A strong partnership here could lead to true decentralisation of Lido’s validators.

This is where co-founder Collin Myers can really stand out. He’s a business development specialist, and this is the area where Obol need to excel if they are to succeed. Partnering and integrating with any and all staking services is the end goal.

Obol already plan for frictionless migration from existing validator clients.

Conclusion

Staking in its current form has many problems, from centralisation and keeping a machine online to loss of keys.

Obol aims to solve these problems and become the de facto multi-operator validator network.